1. Data Loader

* Splits a large dataset into mini-batches so the model can be trained one batch at a time instead of on the whole dataset at once
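As a rough illustration of the batching idea, the sketch below wraps a tiny made-up dataset in a `DataLoader` and lists the shape of each mini-batch it yields. The names `features`, `targets`, and `toy_loader` are hypothetical and exist only for this example.

```python
import torch
from torch.utils.data import DataLoader, TensorDataset

# Toy data (hypothetical): 10 samples with 3 features each, plus binary labels.
features = torch.arange(30, dtype=torch.float32).reshape(10, 3)
targets = torch.arange(10) % 2

# batch_size=4 means the loader hands out up to 4 samples per iteration.
toy_loader = DataLoader(TensorDataset(features, targets), batch_size=4, shuffle=False)

# Collect the shape of every mini-batch the loader yields.
batch_shapes = [tuple(x.shape) for x, _ in toy_loader]
print(batch_shapes)  # [(4, 3), (4, 3), (2, 3)]
```

The last batch is smaller because 10 is not a multiple of 4.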

2. Building a Handwritten Digit Recognition Model

import torch

import torch.nn as nn

import torch.optim as optim

import matplotlib.pyplot as plt

from sklearn.datasets import load_digits

from sklearn.model_selection import train_test_split

device = 'cuda' if torch.cuda.is_available() else 'cpu'

print(device)

digits = load_digits()

X_data = digits['data']

y_data = digits['target']

print(X_data.shape)

print(y_data.shape)

fig, axes = plt.subplots(nrows = 2, ncols = 5, figsize=(14, 8))

for i, ax in enumerate(axes.flatten()):
    ax.imshow(X_data[i].reshape((8, 8)), cmap='gray')
    ax.set_title(y_data[i])
    ax.axis('off')

X_data = torch.FloatTensor(X_data)

y_data = torch.LongTensor(y_data)

print(X_data.shape)

print(y_data.shape)

# torch.Size([1797, 64]) torch.Size([1797])

X_train, X_test, y_train, y_test = train_test_split(X_data, y_data, test_size = 0.2, random_state = 2024)

print(X_train.shape, y_train.shape)

print(X_test.shape, y_test.shape)

# torch.Size([1437, 64]) torch.Size([1437]) torch.Size([360, 64]) torch.Size([360])

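loader = torch.utils.data.DataLoader(
    dataset=list(zip(X_train, y_train)),  # (image, label) pairs
    batch_size=64,                        # samples per mini-batch
    shuffle=True,                         # reshuffle the data every epoch
    drop_last=False                       # keep the final, smaller batch
)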
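To make the effect of `batch_size` and `drop_last` concrete, here is a small sketch that counts batches for a dataset the same size as the training split (1437 samples). It uses random stand-in tensors (`X_fake`, `y_fake`, both hypothetical) and `TensorDataset`, which is one possible alternative to `list(zip(...))`, not the approach used in this post.

```python
import math
import torch
from torch.utils.data import DataLoader, TensorDataset

# Random stand-in data with the same shape as the training split.
X_fake = torch.randn(1437, 64)
y_fake = torch.randint(0, 10, (1437,))
fake_dataset = TensorDataset(X_fake, y_fake)

keep_last = DataLoader(fake_dataset, batch_size=64, shuffle=True, drop_last=False)
skip_last = DataLoader(fake_dataset, batch_size=64, shuffle=True, drop_last=True)

# len(loader) is the number of batches per epoch.
print(len(keep_last), math.ceil(1437 / 64))  # 23 23
print(len(skip_last), 1437 // 64)            # 22 22

# Size of the final batch when it is kept.
last_batch_size = 1437 - 64 * (len(keep_last) - 1)
print(last_batch_size)  # 29
```

With `drop_last=False`, the 29 leftover samples still contribute to training, at the cost of one smaller batch per epoch.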

imgs, labels = next(iter(loader))

fig, axes = plt.subplots(nrows=8, ncols=8, figsize=(14, 14))
for ax, img, label in zip(axes.flatten(), imgs, labels):
    ax.imshow(img.reshape((8, 8)), cmap='gray')
    # .item() turns the 0-dim label tensor into a plain int for the title
    ax.set_title(str(label.item()))
    ax.axis('off')

model = nn.Sequential(
    nn.Linear(64, 10)
)

optimizer = optim.Adam(model.parameters(), lr=0.01)

epochs = 50

for epoch in range(epochs + 1):
    sum_losses = 0
    sum_accs = 0
    for x_batch, y_batch in loader:
        y_pred = model(x_batch)
        loss = nn.CrossEntropyLoss()(y_pred, y_batch)
        optimizer.zero_grad()
        loss.backward()
        optimizer.step()
        # Accumulate the batch loss as a plain Python float
        sum_losses += loss.item()

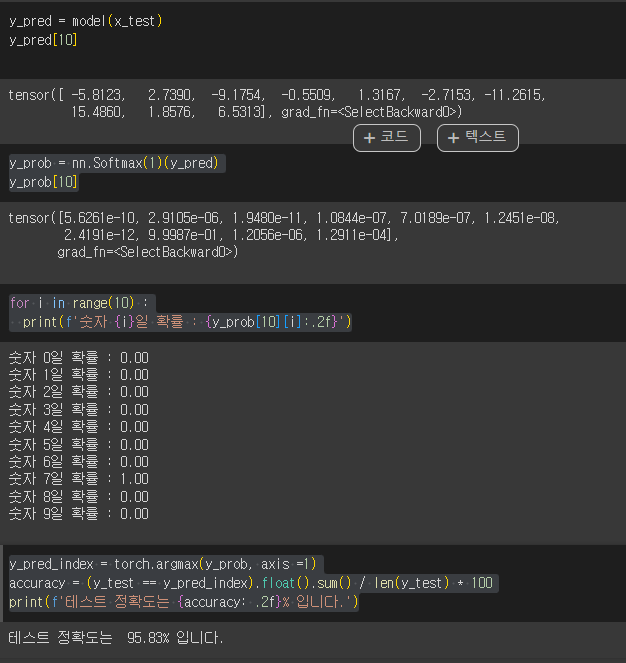

        # Convert logits to probabilities and pick the most likely class
        y_prob = nn.Softmax(1)(y_pred)
        y_pred_index = torch.argmax(y_prob, dim=1)
        # Batch accuracy in percent
        acc = (y_batch == y_pred_index).float().sum() / len(y_batch) * 100
        sum_accs = sum_accs + acc
    # Average over the number of batches in this epoch
    avg_loss = sum_losses / len(loader)
    avg_acc = sum_accs / len(loader)
    print(f'Epoch {epoch:4d}/{epochs} Loss: {avg_loss:.6f} Accuracy : {avg_acc:.2f}%')
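The loop above only reports accuracy on the training batches, while the held-out test split is never used. The following is a minimal, self-contained sketch of how the test split might be evaluated. It is not part of the original post: it splits the NumPy arrays before converting them to tensors, trains for only 10 epochs to keep the example short, and the names `criterion`, `test_pred`, and `test_acc` are introduced here for illustration.

```python
import torch
import torch.nn as nn
from torch.utils.data import DataLoader, TensorDataset
from sklearn.datasets import load_digits
from sklearn.model_selection import train_test_split

torch.manual_seed(0)  # make the sketch repeatable

# Same data and split settings as in the post, split before tensor conversion.
digits = load_digits()
X_tr, X_te, y_tr, y_te = train_test_split(
    digits['data'], digits['target'], test_size=0.2, random_state=2024
)
X_train = torch.tensor(X_tr, dtype=torch.float32)
X_test = torch.tensor(X_te, dtype=torch.float32)
y_train = torch.tensor(y_tr, dtype=torch.long)
y_test = torch.tensor(y_te, dtype=torch.long)

loader = DataLoader(TensorDataset(X_train, y_train), batch_size=64, shuffle=True)
model = nn.Sequential(nn.Linear(64, 10))
optimizer = torch.optim.Adam(model.parameters(), lr=0.01)
criterion = nn.CrossEntropyLoss()

# Shortened training (assumption: 10 epochs is enough for a rough check).
for epoch in range(10):
    for x_batch, y_batch in loader:
        loss = criterion(model(x_batch), y_batch)
        optimizer.zero_grad()
        loss.backward()
        optimizer.step()

# Evaluate on the held-out split without tracking gradients.
with torch.no_grad():
    logits = model(X_test)
    # argmax over logits gives the same class as argmax over softmax probabilities
    test_pred = torch.argmax(logits, dim=1)
    test_acc = (test_pred == y_test).float().mean().item() * 100

print(f'Test accuracy: {test_acc:.2f}%')
```

Because softmax preserves the ordering of the logits, the explicit `nn.Softmax` step is only needed when the probabilities themselves are of interest.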
